How To Provide Elastic Storage To A Hadoop Slave Using LVM (Logical Volume Management) | BigData

Today I will tell you about one more important and awesome concept: LVM (Logical Volume Management).

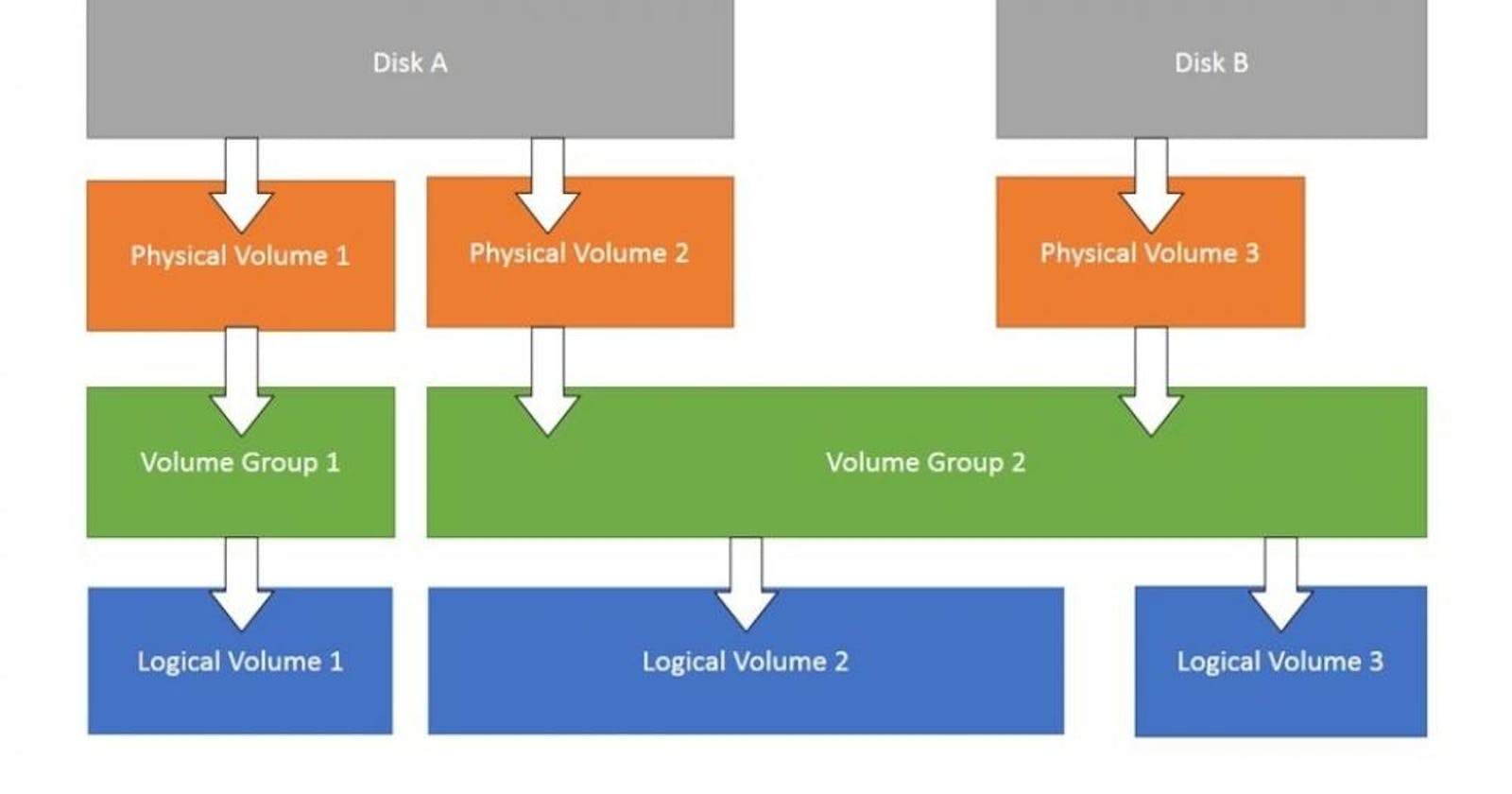

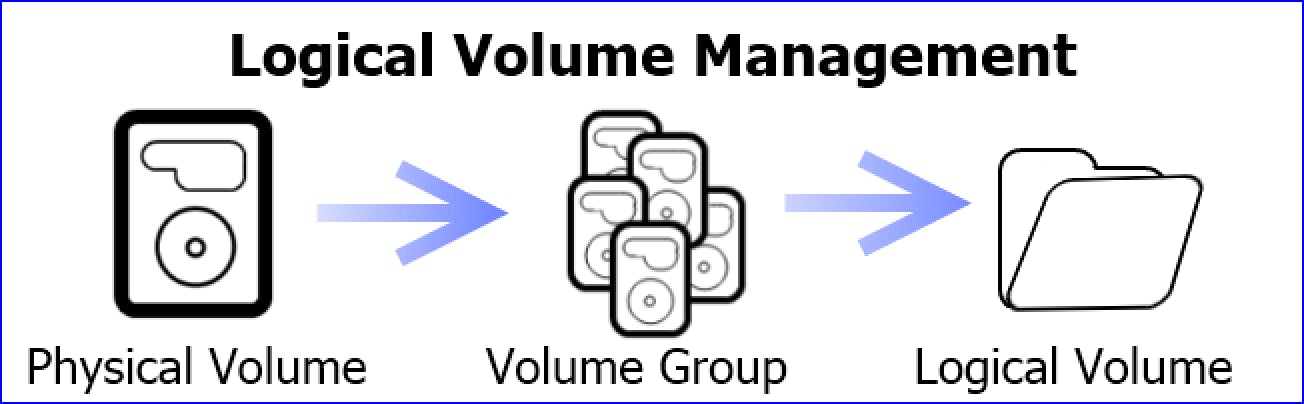

What is LVM?

- LVM allows for very flexible disk space management.

- It lets you add disk space to a logical volume and its filesystem while that filesystem is mounted and active.

- It lets you collect multiple physical hard drives and partitions into a single volume group, which can then be divided into logical volumes.

- The volume manager also allows reducing the amount of disk space allocated to a logical volume, but there are a couple of requirements:

- First, the volume must be unmounted.

- Second, the filesystem itself must be reduced in size before the volume on which it resides can be reduced.
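The following sketch makes the two requirements concrete by shrinking an ext4 logical volume. It assumes the VG/LV names and the /nn mount point used later in this post, plus a hypothetical target size of 35 GiB. The `run` and `shrink_lv` helpers and the `DRY_RUN` switch are illustrative and are not part of LVM. Because these commands can destroy data, the script only prints them unless you run it as root with `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
set -euo pipefail   # stop at the first failing step

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: shrink_lv LV_PATH MOUNT_POINT TARGET_GIB
# Shrinks an ext4 filesystem and the LV under it to TARGET_GIB GiB.
shrink_lv() {
  local lv="$1" mnt="$2" target="$3"
  if [ "$target" -lt 2 ]; then
    echo "target must be at least 2 GiB" >&2
    return 1
  fi
  run umount "$mnt"                        # requirement 1: the volume is unmounted
  run e2fsck -f "$lv"                      # resize2fs wants a forced check before an offline resize
  run resize2fs "$lv" "$((target - 1))G"   # requirement 2: shrink the fs first, 1 GiB below target
  run lvreduce --size "${target}G" "$lv"   # only now reduce the LV itself
  run resize2fs "$lv"                      # grow the fs back to fill the LV exactly
  run mount "$lv" "$mnt"
}

# Example values (hypothetical target): shrink this post's LV to 35 GiB.
shrink_lv /dev/hadoop_namenode/hadoop_lv /nn 35
```

The script first shrinks the filesystem 1 GiB below the target and grows it back at the end. This deliberate safety margin ensures the LV never ends up smaller than the filesystem on it. `lvreduce --resizefs` can also handle the filesystem part for you.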

So Let's Get Started

I love to work in steps, so let's do this in steps too!

Steps:-

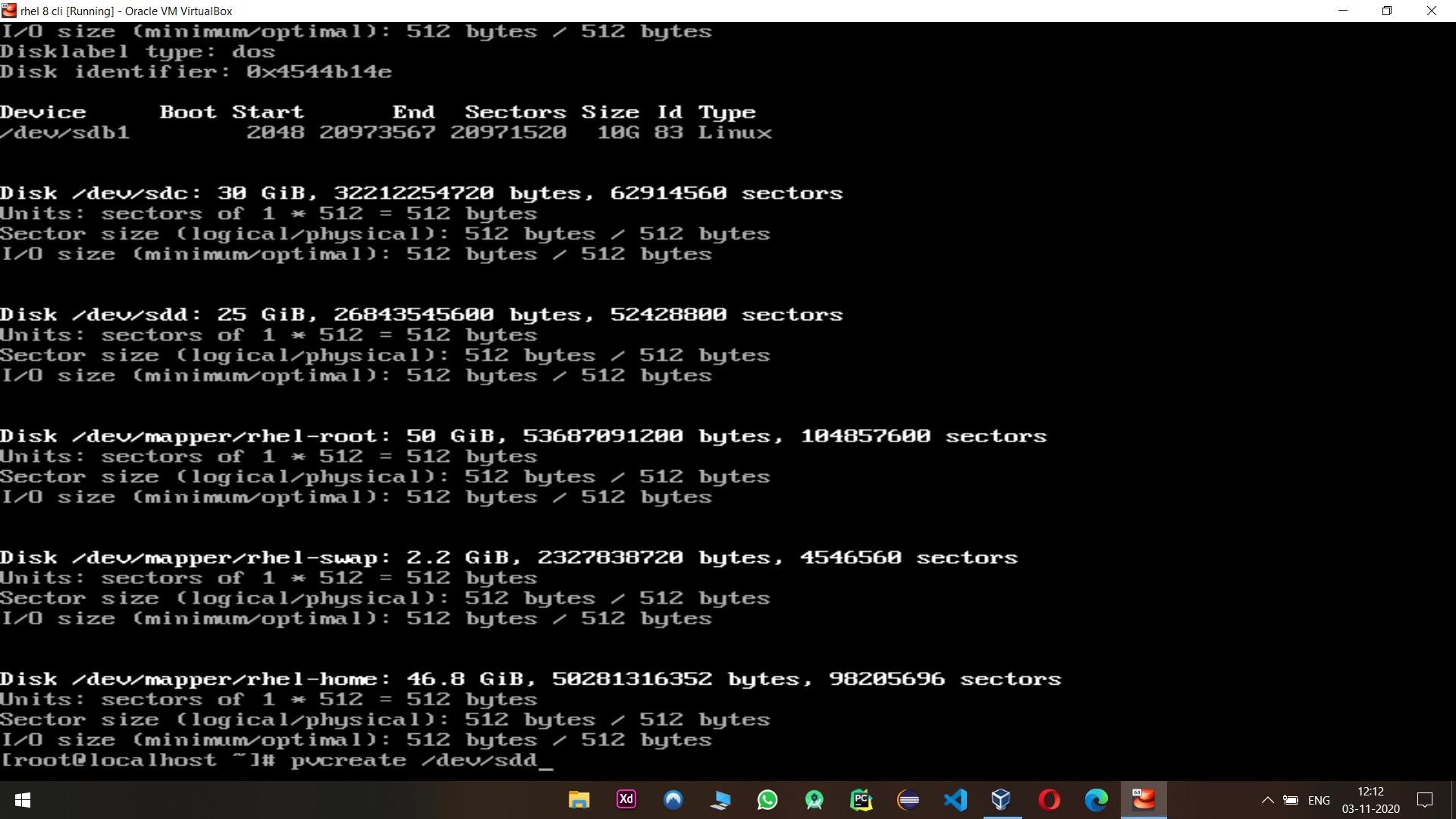

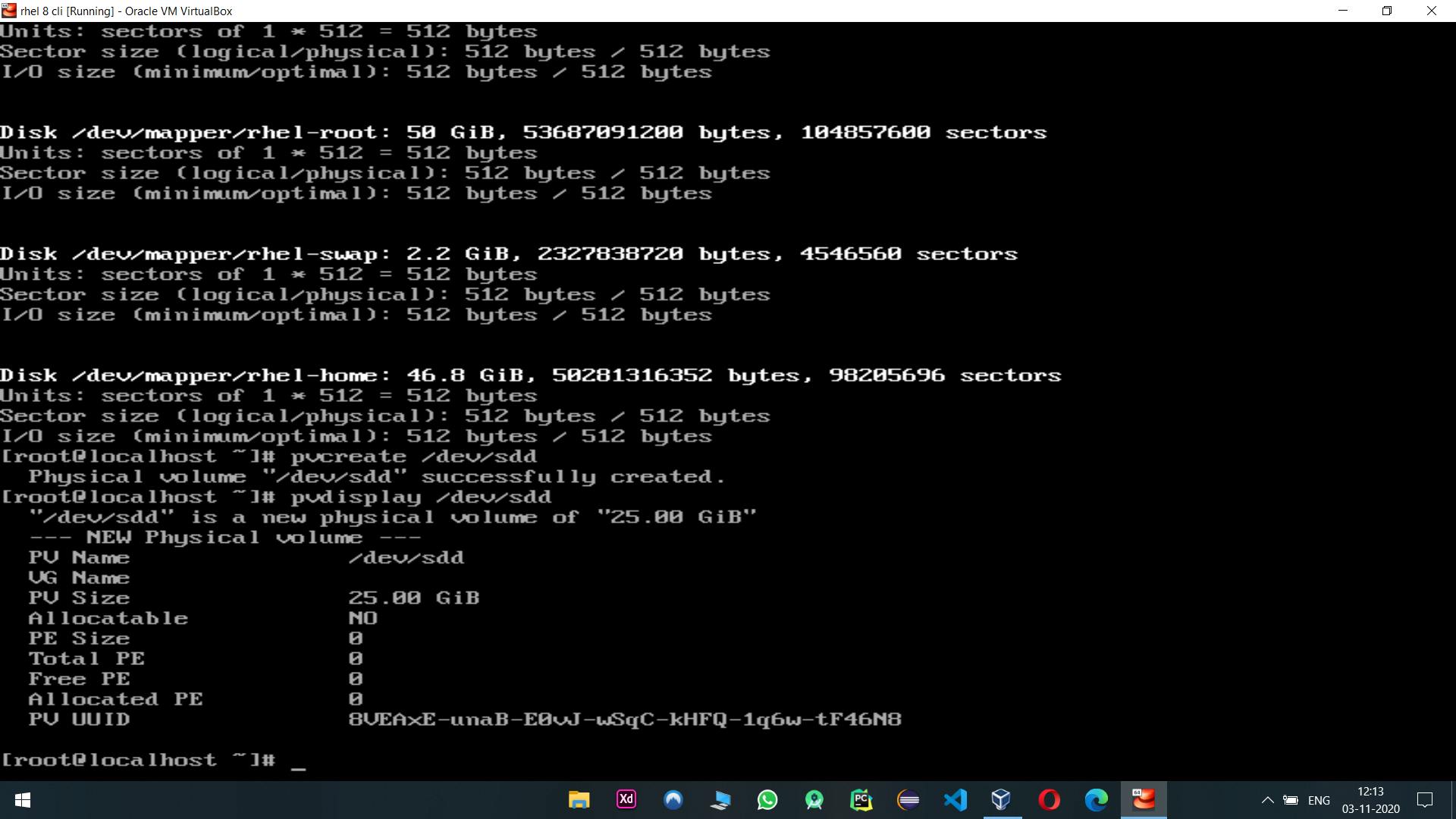

- First, we attach our storage disks to the system. Here our two storage disks are 30 GiB and 25 GiB.

- Now we create the Physical Volumes (PVs).

cmd:- pvcreate /dev/sdc (to convert the disk into a Physical Volume)

- Then we apply the same command to the second disk, /dev/sdd, to create its PV.

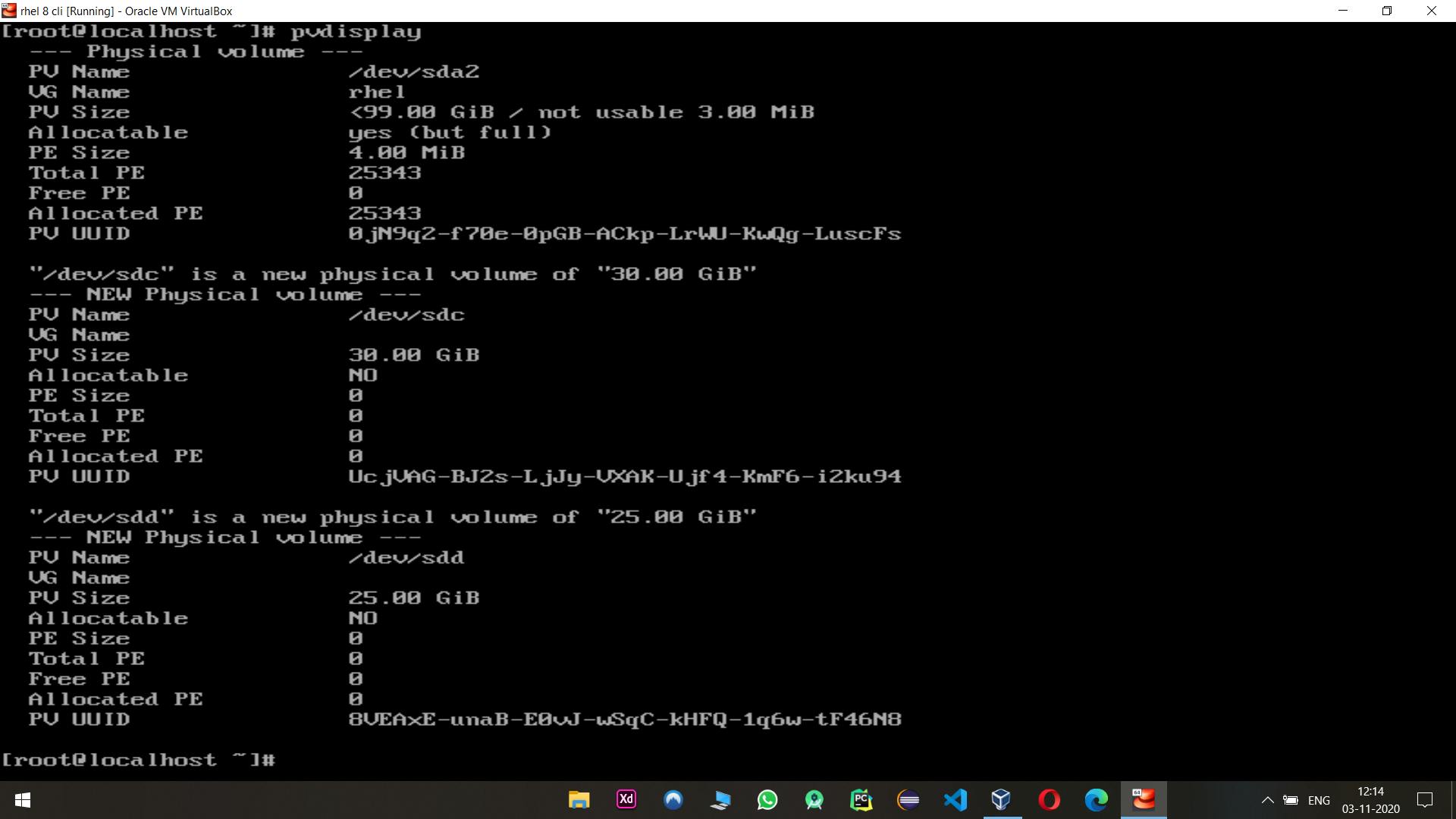

Now let's look at the info about the Physical Volumes:

cmd:- pvdisplay
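The PV step above can be written as a small shell function. This minimal sketch assumes the two empty disks are /dev/sdc and /dev/sdd. Confirm with `lsblk` first, because `pvcreate` writes an LVM label to the disk. `create_pvs`, `run` and the `DRY_RUN` switch are hypothetical helpers. The script only prints commands unless you run it as root with `DRY_RUN=0`.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: create_pvs DISK...
# Turns each (empty) disk into an LVM Physical Volume, then shows them.
create_pvs() {
  run pvcreate "$@"    # one pvcreate call can handle several disks
  run pvdisplay "$@"   # details for just these PVs
}

# The two storage disks used in this post.
create_pvs /dev/sdc /dev/sdd
```

`pvcreate` accepts several devices in one call, so you don't need to run it once per disk. Limiting `pvdisplay` to the same devices keeps its output short.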

- After that, we create a Volume Group (VG) from both PVs.

cmd:- vgcreate hadoop_namenode (name of VG) /dev/sdc /dev/sdd (names of PVs)

To check the info of the VG:

cmd:- vgdisplay hadoop_namenode (name of VG)
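Creating and checking the VG fits in one hypothetical helper, `create_vg`. Like `run` and `DRY_RUN`, it is illustrative and not part of LVM. This sketch assumes the PV names from this post. It only prints commands unless you set `DRY_RUN=0` when running as root.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: create_vg VG_NAME PV...
create_vg() {
  local vg="$1"
  shift
  run vgcreate "$vg" "$@"   # pool all given PVs into one VG
  run vgdisplay "$vg"       # check "VG Size" and "Free PE / Size"
}

create_vg hadoop_namenode /dev/sdc /dev/sdd
```

In the `vgdisplay` output, `VG Size` should be slightly under the 55 GiB total of the two disks. LVM keeps a little space on each PV for metadata and rounds the usable space to whole extents.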

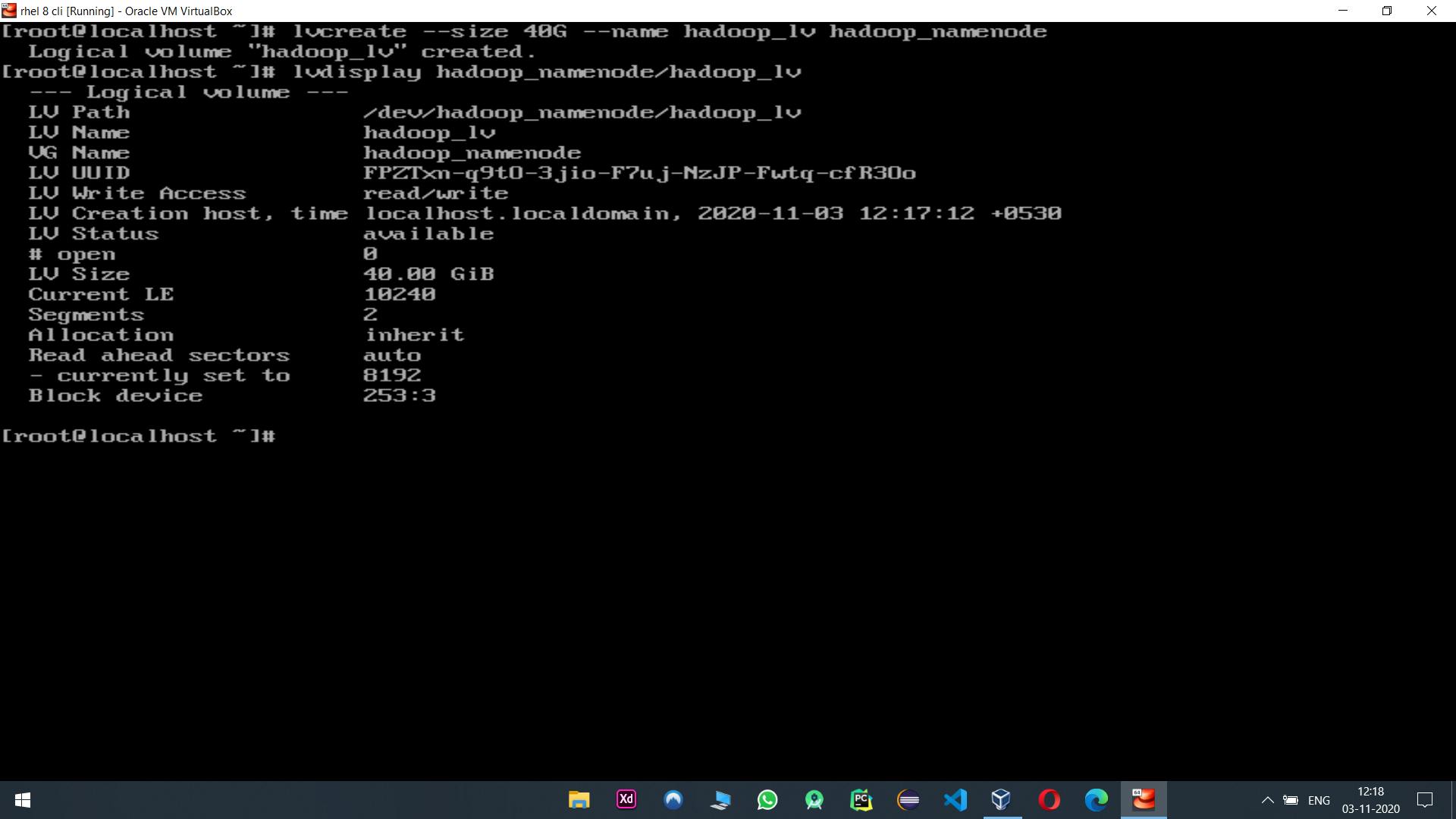

- Now we create our main Logical Volume (LV).

cmd:- lvcreate --size 40G (size of lv) --name hadoop_lv (name of lv) hadoop_namenode (name of vg)
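Here is a sketch of the LV step using a hypothetical `create_lv` helper. It uses the same kind of illustrative `run`/`DRY_RUN` dry-run switch, so nothing is executed unless you set `DRY_RUN=0` as root. Neither disk alone has 40 GiB, so LVM allocates this LV across both PVs. That pooling is exactly what the VG gives you.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: create_lv VG_NAME LV_NAME SIZE
create_lv() {
  local vg="$1" lv="$2" size="$3"
  run lvcreate --size "$size" --name "$lv" "$vg"
  run lvs "$vg"   # one-line summary of every LV in this VG
}

create_lv hadoop_namenode hadoop_lv 40G
```

Once created, the LV is reachable as /dev/hadoop_namenode/hadoop_lv (and as /dev/mapper/hadoop_namenode-hadoop_lv). The next steps use that path.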

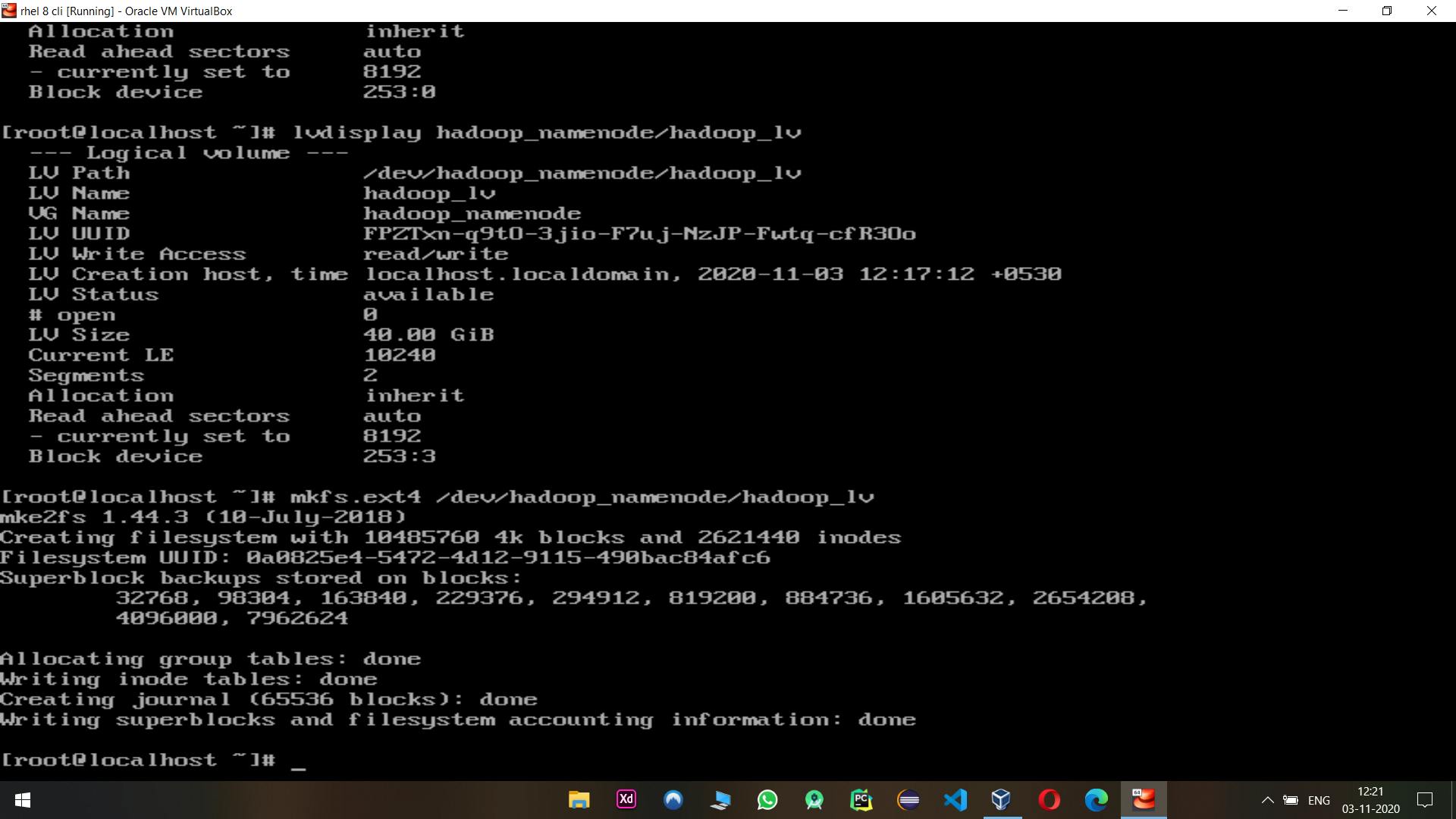

- Now that our Logical Volume is created, we have to format it and mount it on the Hadoop slave's data folder to give it elastic storage.

First, we format it with ext4:

cmd:- mkfs.ext4 /dev/hadoop_namenode/hadoop_lv
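`mkfs.ext4` erases whatever is on the device, so here is a minimal sketch of a guarded format step. The helper `format_lv` is hypothetical. It uses `blkid` (read-only) to refuse formatting when the LV already carries a filesystem. Like the illustrative `run` wrapper, it only prints the mkfs command unless you set `DRY_RUN=0` as root.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: format_lv DEVICE
format_lv() {
  local dev="$1" fstype
  # Read-only probe: empty if the device has no filesystem (or does not exist yet).
  fstype="$(blkid -o value -s TYPE "$dev" 2>/dev/null || true)"
  if [ -n "$fstype" ]; then
    echo "refusing to format $dev: it already contains $fstype" >&2
    return 1
  fi
  run mkfs.ext4 "$dev"
}

format_lv /dev/hadoop_namenode/hadoop_lv
```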

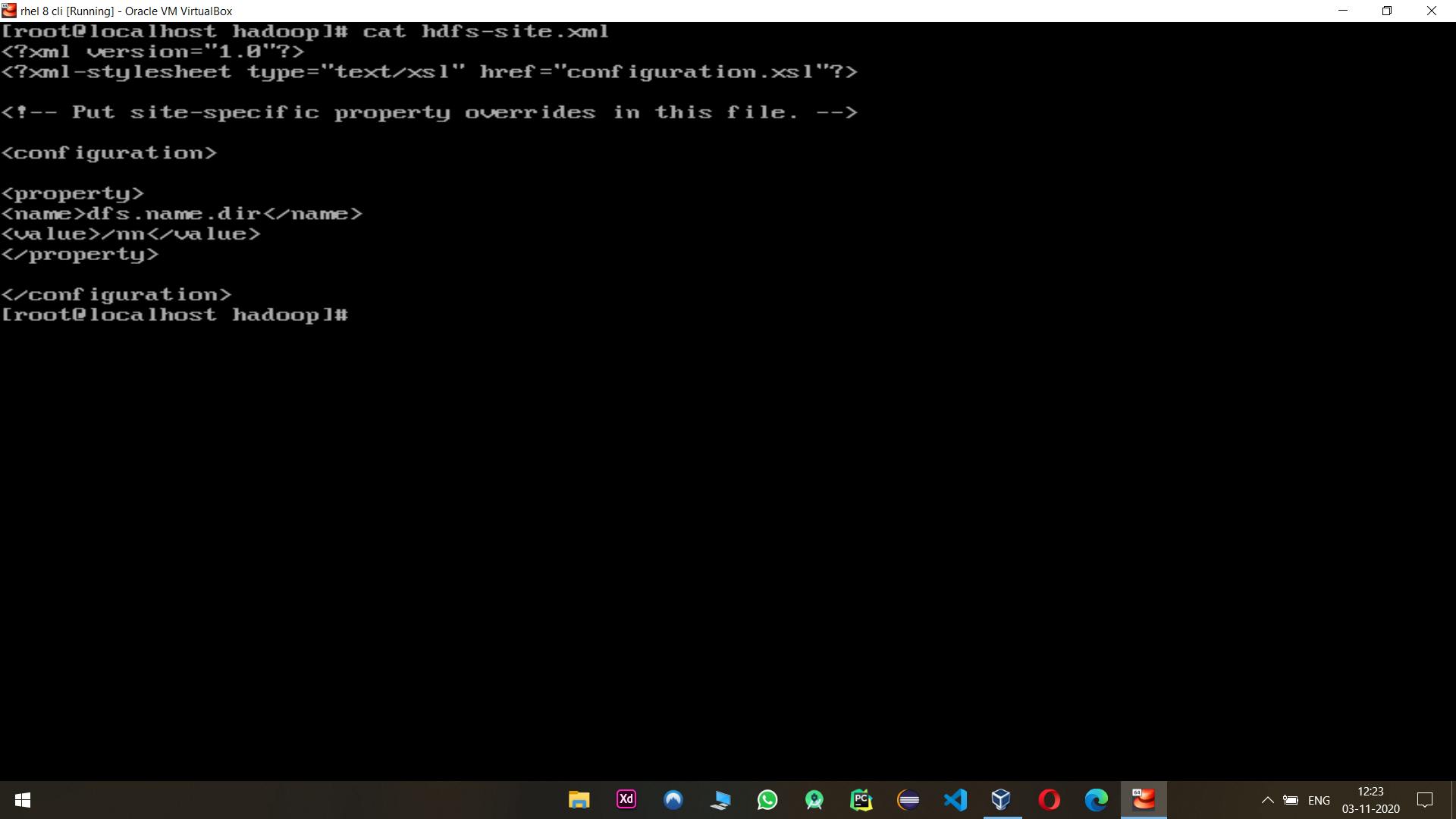

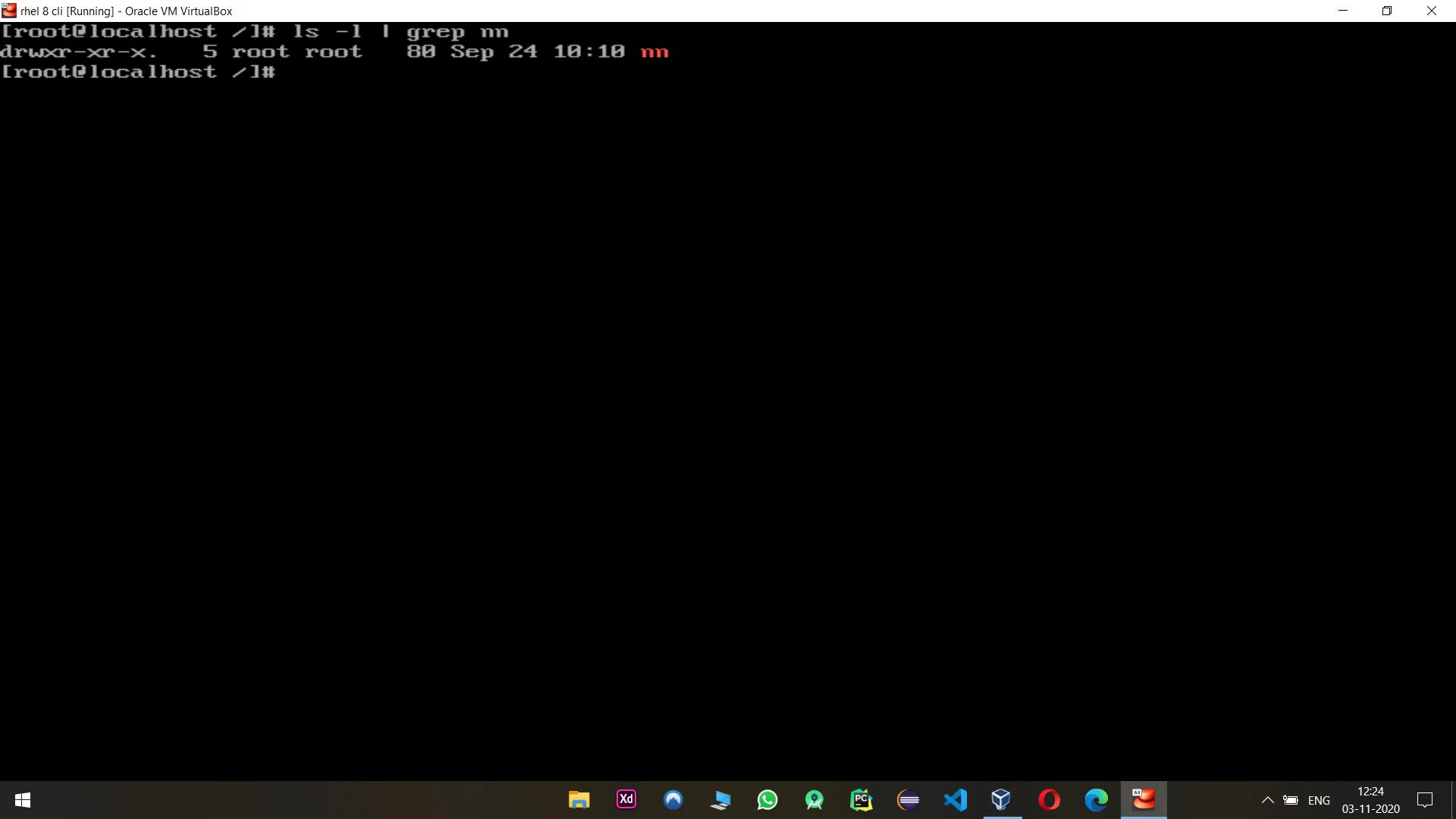

This is my slave node's data folder, /nn.

The current size of the /nn folder is 40 KB.

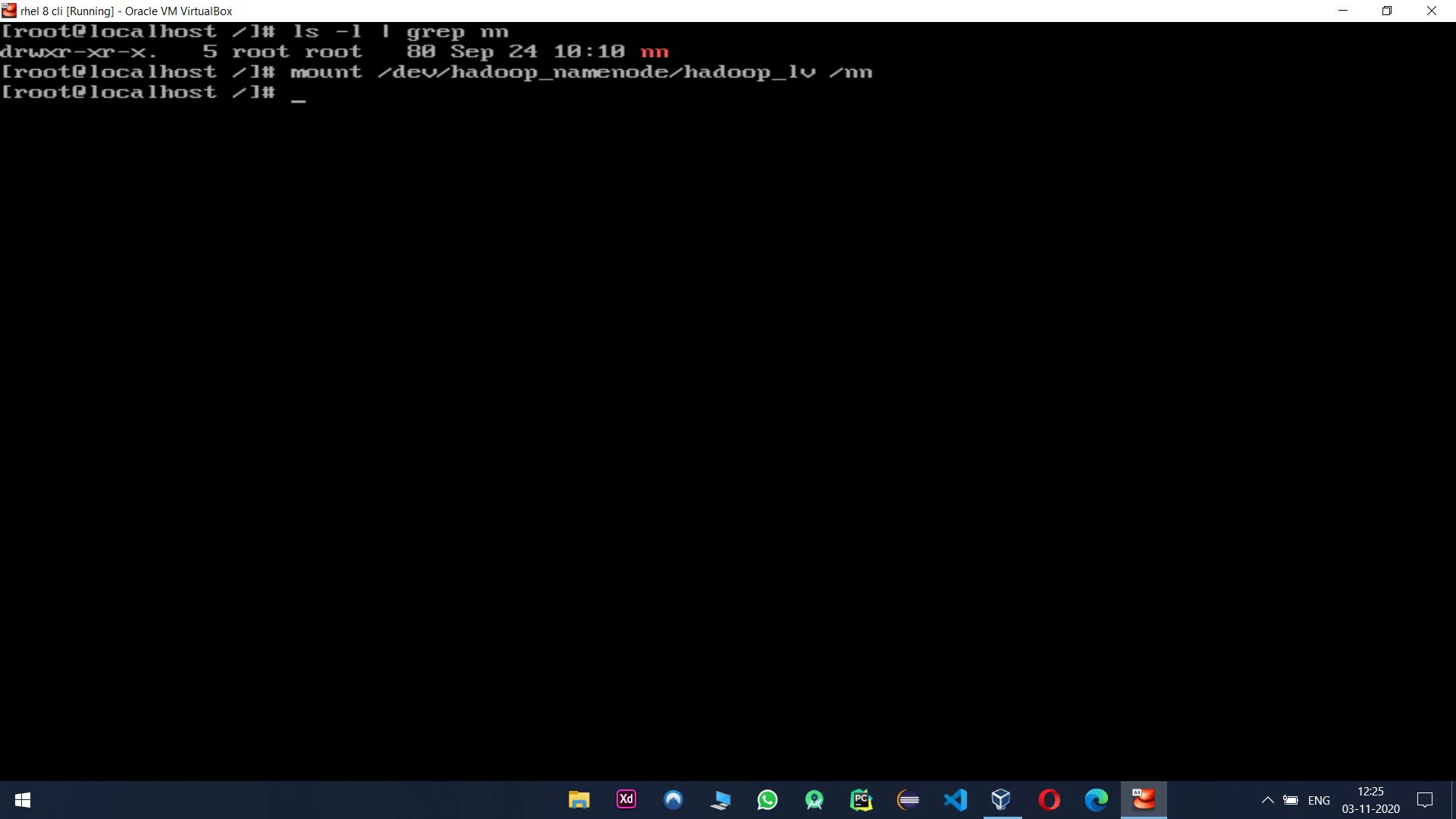

Now we mount our LV on /nn:

cmd:- mount /dev/hadoop_namenode/hadoop_lv /nn

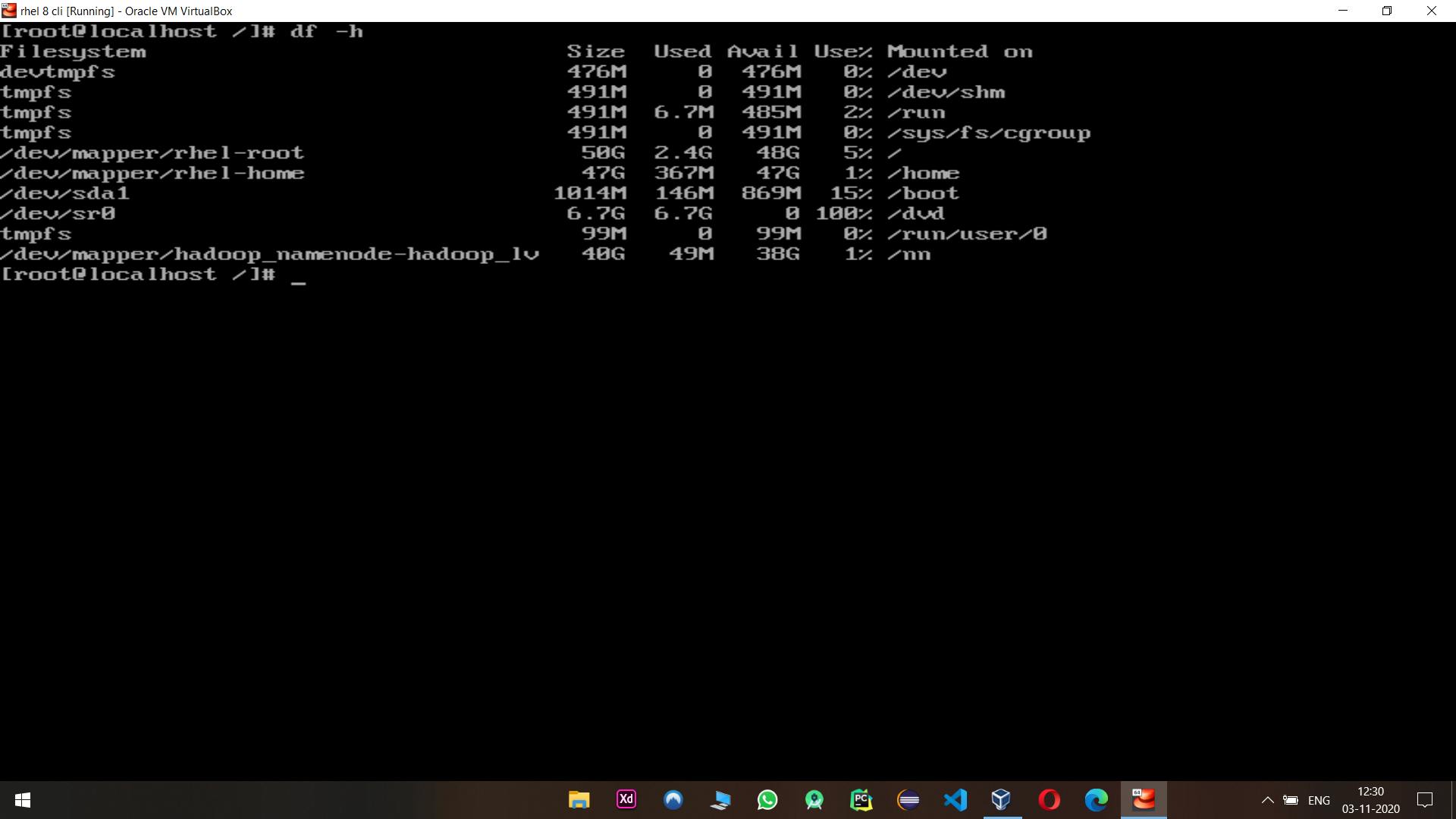

Now our /nn has become 40 GiB:

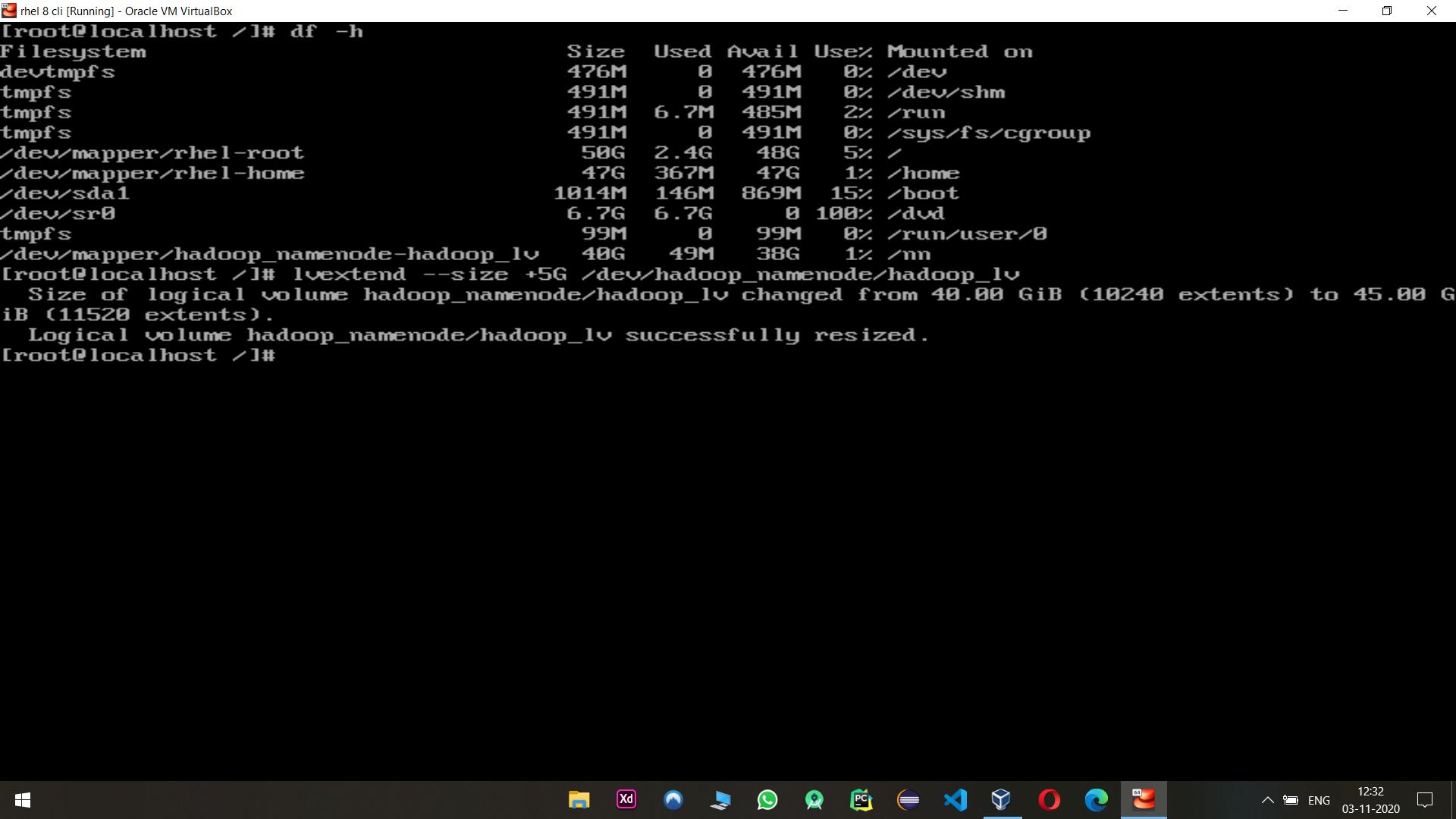

cmd:- df -h
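This sketch wraps the mount step and the `df -h` check in a hypothetical `mount_lv` helper. `run` and `DRY_RUN` are illustrative, and commands are only printed unless you set `DRY_RUN=0` as root.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: mount_lv DEVICE MOUNT_POINT
mount_lv() {
  local dev="$1" mnt="$2"
  run mkdir -p "$mnt"       # make sure the mount point exists
  run mount "$dev" "$mnt"
  run df -h "$mnt"          # "Size" should now be close to 40G
}

mount_lv /dev/hadoop_namenode/hadoop_lv /nn
```

Mounting on top of /nn hides whatever was already inside it (the 40 KB seen earlier) until the LV is unmounted, but it does not delete it.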

Now for the main part: changing the storage size of the slave node on the go.

To do this, we have to extend the LV:

cmd:- lvextend --size +5G /dev/hadoop_namenode/hadoop_lv
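Here is a sketch of the extend step with a hypothetical `extend_lv` helper and an illustrative dry-run wrapper; set `DRY_RUN=0` as root to really execute it. The `+` in `--size +5G` means "grow by 5 GiB". Without it, LVM would treat 5G as the new total size and refuse, because that is smaller than the current 40 GiB. The extension only works while the VG still has free space. It does here: roughly 55 GiB in the VG minus the 40 GiB LV.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: extend_lv LV_PATH AMOUNT   (AMOUNT like 5G)
extend_lv() {
  local dev="$1" add="$2"
  run lvextend --size "+$add" "$dev"   # '+' = grow by AMOUNT, not grow to AMOUNT
  run lvs "$dev"                       # LV size should now be 45 GiB
}

extend_lv /dev/hadoop_namenode/hadoop_lv 5G
```

After this step `lvs` shows 45 GiB, but `df -h` still shows the old size because the filesystem has not been grown yet.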

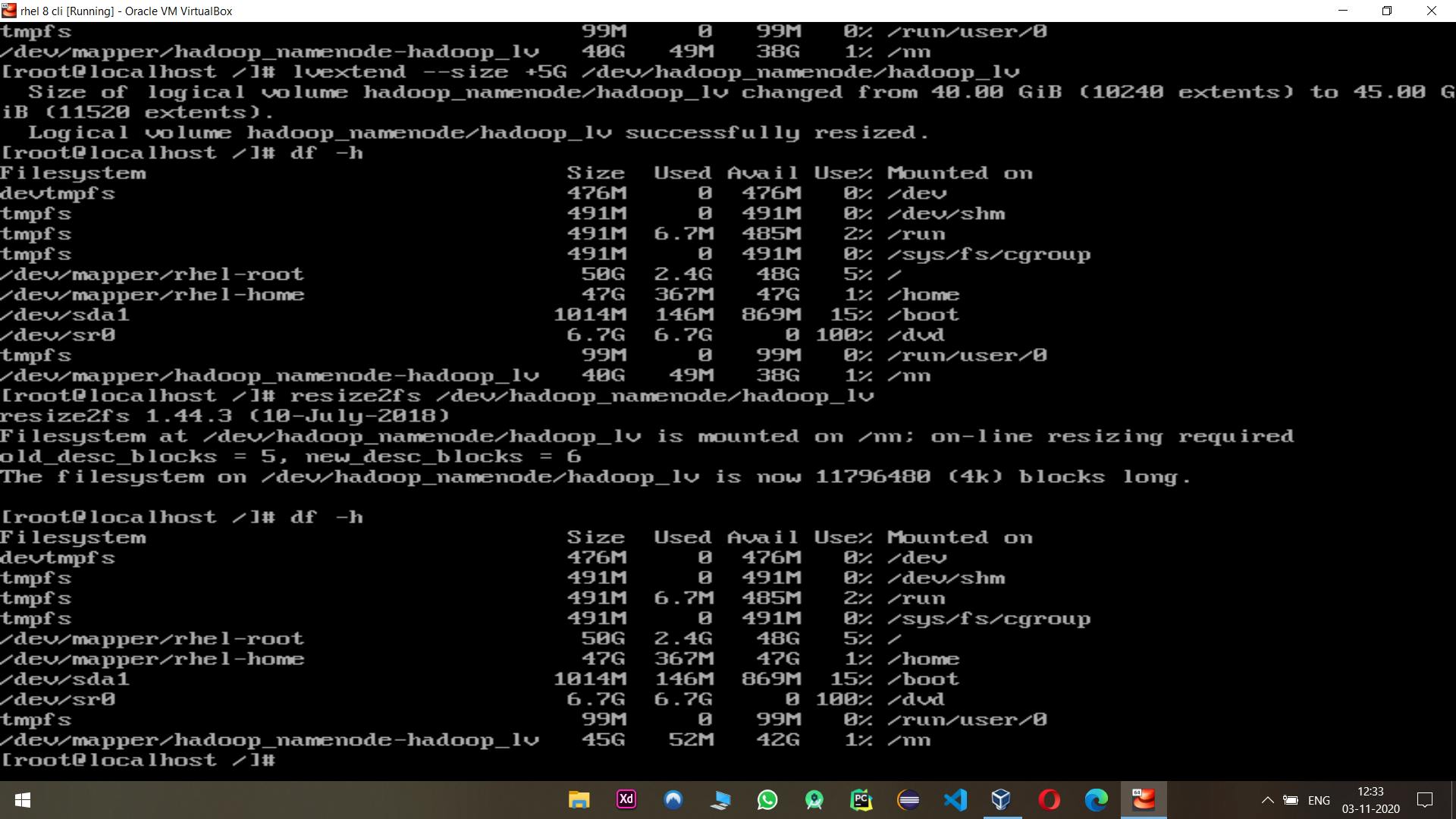

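We also have to grow the filesystem so it can use the new 5 GiB. resize2fs does not reformat anything: it enlarges the existing ext4 filesystem, even while it is mounted, so the data in /nn stays intact: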

cmd:- resize2fs /dev/hadoop_namenode/hadoop_lv
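This sketch grows the filesystem and confirms the result with a hypothetical `grow_fs` helper. It only prints commands unless you set `DRY_RUN=0` as root. With no size argument, `resize2fs` grows ext4 to fill the whole LV. It can do this while /nn stays mounted, so the DataNode's files remain available.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical safety switch: commands are only printed unless DRY_RUN=0 (run as root then).
DRY_RUN="${DRY_RUN:-1}"
run() { if [ "$DRY_RUN" = 0 ]; then "$@"; else echo "+ $*"; fi; }

# Hypothetical helper: grow_fs DEVICE MOUNT_POINT
grow_fs() {
  local dev="$1" mnt="$2"
  run resize2fs "$dev"   # no size given: grow ext4 to the full LV size (works online)
  run df -h "$mnt"       # "Size" should now be close to 45G
}

grow_fs /dev/hadoop_namenode/hadoop_lv /nn

# Alternative: let lvextend grow the filesystem too, in one command:
# run lvextend --resizefs --size +5G /dev/hadoop_namenode/hadoop_lv
```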

Now /nn has become 45 GiB in size. That's it!

Thank you! Make sure to like, share, comment and follow.